by Lidia Paulinska | Jun 27, 2016

E3, June 2016 – It is the season for virtual reality. At E3, the gaming industry show held in June at the Convention Center in Los Angeles, VR was everywhere. So far, virtual reality has been associated mostly with the gaming industry, because the hard-core gaming community is willing to spend large amounts of money on special-purpose hardware such as VR glasses and game consoles.

VR hardware was the big draw this year. With no major console release, the major VR platforms at the show took the spotlight. The Sony PlayStation VR, Oculus and Samsung Gear units dominated the major exhibits, while the HTC Vive dominated the software showcases. Not counting the companies giving demos in private rooms, there were 16 companies on the two expo floors with VR hardware or software: Sony, Oculus, Samsung, HTC, Pop up Gaming, Time of VR, Naughty America, CAPCOM, Carl Zeiss, Alienware, Bethesda, Warner Bros, Ubisoft, Cubicle Ninjas, Razer and Nyko.

Computer-simulated reality dates back 77 years. Here are the key moments in VR history. It started in 1939 at a trade show in New York City, where the View-Master, a stereoscopic alternative to the panoramic postcard, was introduced. About 30 years passed before Ivan Sutherland came up with the first head-mounted display, called “The Sword of Damocles”. Roughly another 25 years went by before the computer games company Sega introduced wrap-around VR glasses at CES in 1993. Two years later Nintendo produced a gaming console and named it Virtual Boy.

The real gold rush for virtual reality started in 2010. Market research analysts from Deloitte, CCS Insight, Barclays and Digi-Capital forecast that 24 million VR devices will be sold by 2018, and that revenue from virtual and augmented reality products and content will reach the $150 billion mark by 2020.

by Tom P Kolodziejak | Jun 26, 2016

E3, June 2016 – Every year at E3, the Entertainment Software Association (ESA), which owns and operates the show, issues essential facts about the gaming sector. The ESA conducts business and consumer research and provides analysis and advocacy on issues such as global content protection, intellectual property, technology and e-commerce. It is a valuable source of information such as player demographics and game statistics. Below is what the ESA’s report shows for this year.

Who is playing, and what are the players’ demographics?

63% of U.S. households are home to at least one person who plays video games regularly, defined as 3 hours or more per week. There is an average of 1.7 gamers in each game-playing U.S. household. 65% of U.S. households own a device that is used to play video games, and 48% own a dedicated game console.

According to the ESA, the gamer demographics are as follows. The average game player is 35 years old: 27% are under 18, 29% are 18-35, 18% are 36-49 and 26% are 50 or older. 59% of gamers are male and 41% are female. The most frequent female game player is on average 44 years old, and the average male game player is 35 years old. Women age 18 or older represent a significantly greater portion of the game-playing population (31%) than boys age 18 or younger (17%). Female video gamers are split evenly by age: 50% are 35 or under and 50% are older than 35. The average number of years gamers have been playing video games is 13.

We know who is playing, but who is buying?

The most frequent game purchaser is 38 years old. 60% of them are male and 40% are female. 52% of them feel that video games provide more value for their money than DVDs (23%), music (14%) or going to the movies (10%). Of the most frequent game purchasers, 41% purchase games without having tried them, 31% download the full game from the company’s website and 30% purchase after downloading the trial version or demo. It is no surprise that 95% of gamers who own dedicated game consoles purchase video games for them.

What and how do gamers play?

48% of the most frequent gamers play social games. The top devices gamers use are the PC (56%), dedicated game console (53%), smartphone (36%), wireless devices (31%) and dedicated handheld system (17%). The top three types of video games played on wireless or mobile devices are puzzle, board, card or game-show games at 38%, action games at 6% and strategy games, also at 6%. Gamers who play multiplayer and online games spend an average of 6.5 hours per week playing with other gamers online and 4.6 hours per week playing in person. 51% of gamers play a multiplayer mode at least weekly.

Why do gamers play?

53% of gamers feel that video games help them connect with friends, and 42% believe that games help them spend time with family. 75% of gamers believe that playing games provides mental stimulation or education.

by Lidia Paulinska | Jun 14, 2016

GTC, May 2016 – At the GPU Technology Conference, held in California in May 2016, Jen-Hsun Huang, the co-founder and CEO of Nvidia, one of the largest American manufacturers of graphics accelerator chips, announced that VR is going to change the way we design and experience products. Shopping for cars is one example: it is like being in a virtual showroom where we can walk around our custom-designed car, open its door and check out the interior.

Two amazing virtual reality demos were shown at the conference: Everest VR and Mars 2030.

For the “Everest VR” demo, Nvidia partnered with Solfar, a Nordic VR games company, and RVX, a Nordic visual effects studio for the motion picture industry. RVX has worked on movies such as “Gravity”, which won the Oscar for Visual Effects. Using advanced stereo photogrammetry, a CGI (computer-generated imagery) model of Mount Everest was created pixel by pixel.

For “Mars 2030”, Nvidia worked with scientists and engineers at NASA, along with Fusion VR, using images from dozens of satellite flybys of Mars. They reconstructed 8 square kilometers of the planet’s surface. Even the rocks were hand-sculpted, with millions of them carefully placed based on the satellite images.

Steve Wozniak, a co-founder of Apple, was invited to experience the Mars 2030 demo. As soon as he slipped on his headset, he was transported to a rover to drive around the planet.

Here are the key moments in VR history.

It started in 1939 at a trade show in New York City, where the View-Master, a stereoscopic alternative to the panoramic postcard, was introduced. About 30 years passed before Ivan Sutherland came up with the first head-mounted display, called “The Sword of Damocles”. Roughly another 25 years went by before the computer games company Sega introduced wrap-around VR glasses at CES in 1993. Two years later Nintendo produced a gaming console and named it Virtual Boy.

The real gold rush for virtual reality started in 2010.

That year Google came out with a 360-degree version of Street View on Google Maps. In 2012 the small company Oculus raised $2.4 million to produce VR glasses. Two years later, Oculus was purchased by Mark Zuckerberg, the founder of Facebook, for $2 billion! Market research analysts from Deloitte, CCS Insight, Barclays and Digi-Capital forecast that 24 million VR devices will be sold by 2018, and that revenue from virtual and augmented reality products and content will reach the $150 billion mark by 2020.

So far, virtual reality has been associated mostly with the gaming industry, because the hard-core gaming community is willing to spend large amounts of money on special-purpose hardware such as VR glasses and game consoles. But that is changing. VR is appearing in different sectors of business and entertainment.

Yes, VR is known to cause motion sickness in some viewers, and there are still a number of obstacles to work on, but the technology is unstoppable now.

by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – The original title of the talk by Larry Matthies, Senior Scientist at NASA’s Jet Propulsion Laboratory, was “Using Vision to Enable Autonomous Land, Sea and Air Vehicles”. Matthies added “Space” to the list to emphasize the range of environments these vehicles operate in. “How could I forget about space?” he noted. When we think about autonomous vehicles, what comes to mind are vehicles on the road, but scientists are continuing to develop driverless vehicles for sea, air and space.

Matthies mentioned that the primary application domains and main JPL themes for autonomous vehicles are now as follows: 1. Land: all-terrain autonomous mobility; mobile manipulation. 2. Sea: USV escort teams; UUVs for subsurface oceanography. 3. Space: assembling large structures in Earth orbit. 4. Air: Mars precision landing; rotorcraft for Mars and Titan; drone autonomy on Earth.

Then he described some of the capabilities and challenges that the scientists are facing. One important capability is absolute and relative localization. The key challenges in this domain are: appearance variability, lighting, weather, seasonality, moving objects, and fail-safe performance. Localization has been tested on wheeled, tracked, and legged vehicles in both indoor and outdoor settings, as well as with drones, and Mars rovers and landers.

Another capability the speaker discussed is obstacle detection. This covers stationary and moving objects, obstacle type identification and classification, and the ability to determine terrain trafficability, that is, whether the terrain can be traversed. Complementing the detection functions are the understanding of other scene semantics such as landmarks, signs and destinations, perceiving people and their activities, and perception for grasping.

The challenges facing the sensors are non-trivial. Difficult image characteristics include fast motion and variable lighting conditions such as low light, no light and very wide dynamic range. The environment can have atmospheric conditions such as haze, fog, smoke or precipitation. Objects can be featureless, specular or transparent, and terrain can hide obstacles in grass, water, snow, ice or mud. Finally, the last challenge that developers must address is the tradeoff between computational cost and processor power.

by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – Marco Jacobs from Videantis talked about the status, challenges and trends in computer vision for cars. Videantis, which has been in business for over 10 years, is the number 1 supplier of vision processors. In 2008 the company moved into the automotive space.

What is the future of transportation?

We will definitely be travelling less than today, typically less than 100 miles in a day. The only autonomous people mover today is the elevator. So when can we expect autonomous cars on our roads? At CES 2016 the CEO of Bosch answered: “Next decade maybe”. For now we have low-speed and parking assistance, but highway driving, exit to exit… around 2020.

Autonomous vehicle development is described in levels that range from L0 to L5. Levels L0, L1 and L2 are in production today. At L0 the driver fully operates the vehicle. At L1 the driver holds the wheel or controls the pedals while the vehicle steers or controls speed. At L2 the vehicle drives itself, but not 100% safely, so the driver must monitor it at all times. L3 still needs R&D, and L4 and L5 are where the paradigm changes.
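The level scheme described above can be captured in a small lookup structure. The following is a minimal sketch, not an official definition: the names `AutomationLevel`, `DESCRIPTIONS` and `status` are hypothetical, and the descriptions only paraphrase what the talk summary says (L3 to L5 are not detailed there).

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Driving-automation levels L0 to L5, as summarized in the talk."""
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


# Paraphrased from the article; only L0-L2 are described there.
DESCRIPTIONS = {
    AutomationLevel.L0: "driver fully operates the vehicle",
    AutomationLevel.L1: "driver holds the wheel or controls the pedals; "
                        "vehicle steers or controls speed",
    AutomationLevel.L2: "vehicle drives itself; driver monitors at all times",
}

# Highest level said to be in production at the time of the talk.
IN_PRODUCTION_MAX = AutomationLevel.L2


def status(level: AutomationLevel) -> str:
    """Return the development status the talk assigned to a level."""
    if level <= IN_PRODUCTION_MAX:
        return "in production"
    if level == AutomationLevel.L3:
        return "needs R&D"
    return "paradigm change"


if __name__ == "__main__":
    for level in AutomationLevel:
        detail = DESCRIPTIONS.get(level, "not detailed in the talk summary")
        print(f"{level.name}: {status(level)} ({detail})")
```

Using an ordered enum makes the "in production up to L2" rule a single comparison rather than a list of special cases.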

How does the market look in numbers?

There are 1.2B vehicles on the road. 20 OEMs each produce over 1M vehicles a year, and 100M cars are sold each year. 100 Tier 1 suppliers each generate over $1B in revenue ($800B combined). It is a less than $1T business excluding infrastructure, fuel and insurance, and around 25% of the cost is electronics.

Today, new cars carry 0.4 cameras on average. There is an opportunity to increase that number to 10 cameras per car to extend visibility. L0 vehicles have rear, surround and mirror cameras. L2 and L3 vehicles will have rear, surround, mirror and front cameras.
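To get a feel for the size of that opportunity, the figures quoted in the talk can be combined in a quick back-of-the-envelope calculation. This is only a sketch: it assumes the 100M cars sold each year are all new cars and that the per-car averages apply to every one of them, which the talk did not state. The function name `cameras_per_year` is made up for illustration.

```python
# Figures quoted in the talk.
CARS_SOLD_PER_YEAR = 100_000_000
CAMERAS_PER_CAR_TODAY = 0.4
CAMERAS_PER_CAR_TARGET = 10


def cameras_per_year(cars: int, cameras_per_car: float) -> int:
    """Annual camera volume, assuming every car sold carries the average."""
    return round(cars * cameras_per_car)


today = cameras_per_year(CARS_SOLD_PER_YEAR, CAMERAS_PER_CAR_TODAY)
target = cameras_per_year(CARS_SOLD_PER_YEAR, CAMERAS_PER_CAR_TARGET)
growth = target / today

if __name__ == "__main__":
    print(f"Cameras per year today:  {today:,}")
    print(f"Cameras per year target: {target:,}")
    print(f"Growth factor:           {growth:.0f}x")
```

Under these assumptions, annual volume would rise from about 40 million to 1 billion cameras, a 25-fold increase.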

Rear camera functions typically include a wide-angle lens, lens dewarping, graphics for guidelines, and H.264 compression for transmission over automotive in-car Ethernet. Vision technology allows for real-time camera calibration, dirty lens detection, parking assistance, cross-traffic alert, backover protection and trailer steering assistance.

Typical surround-view functions include image stitching and re-projection. Vision technology in this application offers structure from motion and automated parking assistance, such as marker detection, free parking space detection and obstacle detection, in addition to everything the rear camera vision functions include.

Replacing mirrors with cameras will reduce drag and expand the driver’s view. Typical functions include image stitching and lens dewarping, blind spot detection and rear collision warning. Vision technology offers object detection, optical flow and structure from motion, as well as the basic features found in rear cameras.

Typical functions of a front camera, which provides control of speed and the steering wheel, are emergency braking, auto cruise control, pedestrian and vehicle detection, lane detection and keeping, traffic sign recognition, headlight control, bicycle recognition (2018) and intersections (2020). Driver monitoring, which is present at L0, has features such as driver drowsiness detection, driver distraction detection, airbag deployment, seatbelt adjustment and driver authentication. Vision technology offers face detection and analysis, as well as detection of the driver’s posture.

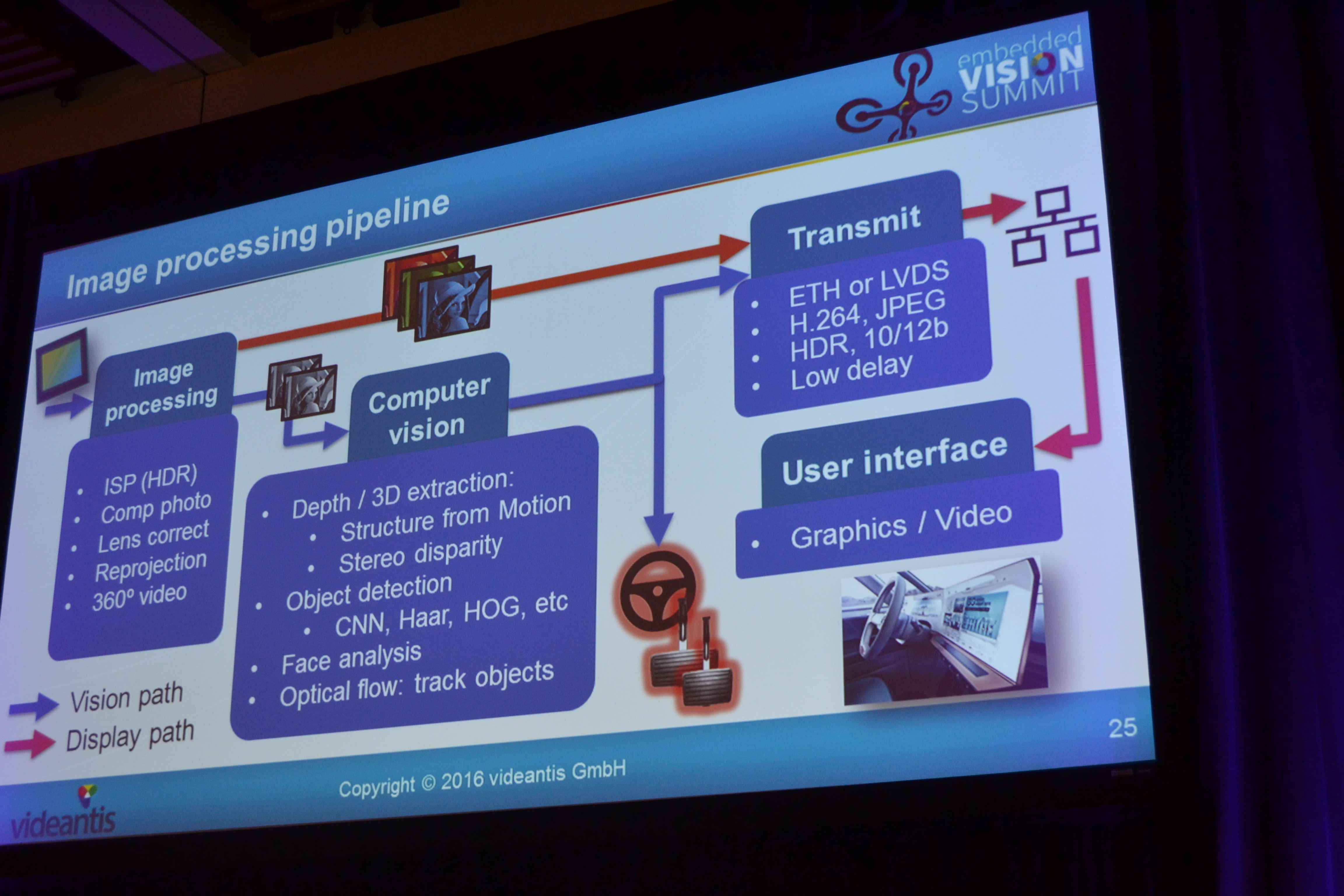

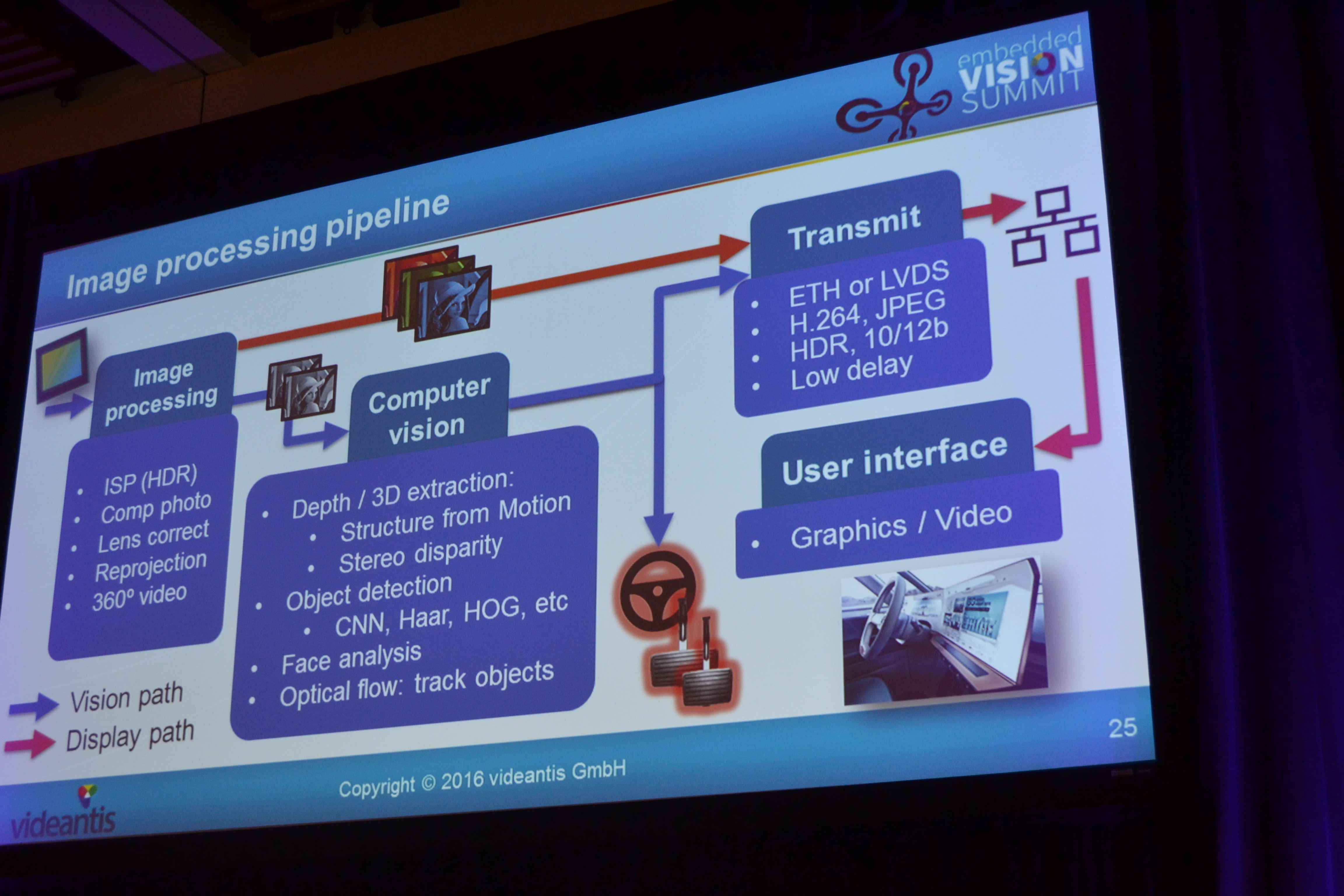

Here is the image processing pipeline:

The speaker discussed several challenges. The first is working under all conditions: cold and hot (low power); dark and light (HDR, noise); a dirty lens; detecting angles; a speed range of 0-120 mph, which requires selecting different algorithms; and a loaded or dinged car, which affects calibration. Another challenge is working under severe power constraints: a 100W power source, small form factors that limit heat dissipation, and a complete smart camera that has to run on less than 1W.

A further question is whether centralized or distributed processing is the better option, as both have pros and cons. The advantage of central processing is that a single processing platform eases software development. The disadvantages are that an entry-level car also needs a high-end head unit, that the system is neither scalable nor modular, and that adding cameras causes system overload. Distributed processing seems to have more advantages: a low-end head unit is sufficient, options become plug-and-play, and every camera adds processing capability. The cost is that the system is more complex. The reality today is that some cars have 250 ECUs.

Jacobs’s final conclusions were: the business opportunity is huge (>$1T); self-driving car technology causes a paradigm shift (new players can grab market share); automotive is not like consumer electronics; there will be no self-driving cars in the next 10 years (change will be gradual, with lots of driver-assist functions based on vision technologies); and efficient computer vision systems are the key enabler for making our cars safer.