by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – The original title of the talk by Larry Matthies, Senior Scientist at NASA's Jet Propulsion Laboratory, was “Using Vision to Enable Autonomous Land, Sea and Air Vehicles”. Matthies added “and Space” to the title to cover the full range of environments these vehicles operate in. “How could I forget about space?” he noted. When we think about autonomous vehicles, we usually picture cars on the road, but scientists are also developing driverless vehicles for sea, air and space.

Matthies said that the primary application domains and main JPL themes for autonomous vehicles are now:

1. Land: all-terrain autonomous mobility; mobile manipulation
2. Sea: USV (unmanned surface vehicle) escort teams; UUVs (unmanned underwater vehicles) for subsurface oceanography
3. Space: assembling large structures in Earth orbit
4. Air: Mars precision landing; rotorcraft for Mars and Titan; drone autonomy on Earth

He then described several key capabilities and the challenges scientists face in developing them. One important capability is absolute and relative localization. The key challenges in this domain are appearance variability, lighting, weather, seasonality, moving objects, and fail-safe performance. Localization has been tested on wheeled, tracked and legged vehicles in both indoor and outdoor settings, as well as on drones and on Mars rovers and landers.

Another capability the speaker discussed is obstacle detection. This covers detecting stationary and moving objects, identifying and classifying obstacle types, and determining terrain trafficability, that is, whether and how easily the terrain can be traversed. Complementing detection is the understanding of other scene semantics, such as landmarks, signs and destinations; perceiving people and their activities; and perception for grasping.

The challenges facing perception sensors are non-trivial. Difficult image conditions include fast motion and variable lighting, such as low light, no light and very wide dynamic range. The environment can add atmospheric conditions such as haze, fog, smoke or precipitation. Objects can be hard to perceive because they are featureless, specular or transparent, and some terrain types are difficult, such as obstacles in grass, water, snow, ice and mud. Finally, developers must address the tradeoff between computational cost and processor power.

by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – Marco Jacobs from Videantis talked about the status, challenges and trends in computer vision for cars. Videantis, which has been in business for over 10 years, is the number one supplier of vision processors. In 2008, the company moved into the automotive space.

What is the future of transportation?

We will certainly be traveling less than we do today, typically less than 100 miles a day. Today, the only autonomous people mover is the elevator. So when can we expect autonomous cars on our roads? At CES 2016, the CEO of Bosch answered: “Next decade, maybe.” For now, we have low-speed and parking assistance; highway driving, from exit to exit, is expected around 2020.

Vehicle autonomy is described in levels ranging from L0 to L5. Levels L0, L1 and L2 are in production today. At L0, the driver fully operates the vehicle. At L1, the vehicle either steers or controls speed, while the driver holds the wheel or operates the pedals. At L2, the vehicle drives itself, but not 100% safely, so the driver must monitor it at all times. L3 still needs R&D, and L4 and L5 are where the paradigm changes.

What does the market look like in numbers?

There are 1.2B vehicles on the road, and 100M cars are sold each year. About 20 OEMs each produce over 1M vehicles a year, and about 100 Tier 1 suppliers each earn over $1B in revenue ($800B combined). The business is just under $1T, excluding infrastructure, fuel and insurance, and around 25% of the cost is electronics.
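As a rough back-of-envelope reading of these figures, assuming the talk's numbers are annual and that the 25% electronics share applies across the whole (just under) $1T business, which the talk did not state explicitly:

$$
\text{electronics} \approx 0.25 \times \$1\,\text{T} = \$250\,\text{B per year (upper bound)}
$$

$$
\text{average Tier 1 revenue} \approx \frac{\$800\,\text{B}}{100} = \$8\,\text{B}
$$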

Today, new cars carry on average 0.4 cameras each. There is an opportunity to increase that number to 10 cameras per car to extend visibility. L0 vehicles use rear, surround and mirror cameras; L2 and L3 vehicles will add front cameras.
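Combining the 100M cars sold each year with these camera counts gives a rough sense of the volumes involved. This is a simple illustration assuming every new car carries the stated average, not a forecast from the talk:

$$
100\,\text{M cars/year} \times 0.4\ \text{cameras/car} = 40\,\text{M cameras/year}
$$

$$
100\,\text{M cars/year} \times 10\ \text{cameras/car} = 1\,\text{B cameras/year}
$$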

Typical rear camera functions include a wide-angle lens, lens dewarping, graphic overlays for parking guidelines, and H.264 compression for transmission over in-car automotive Ethernet. Vision technology adds real-time camera calibration, dirty lens detection, parking assistance, cross-traffic alert, backover protection and trailer steering assistance.
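Lens dewarping is commonly done by inverse mapping: for every pixel of the corrected output image, compute where that ray landed in the raw, distorted image and sample it there. The sketch below assumes a simple radial (Brown-Conrady) distortion model with coefficients `k1` and `k2` and pinhole intrinsics `fx`, `fy`, `cx`, `cy`; these parameters, the function name `dewarp` and the nearest-neighbour sampling are illustrative choices, not the method described in the talk. Strongly wide-angle or fisheye automotive lenses often need a dedicated fisheye model instead.

```python
def dewarp(image, fx, fy, cx, cy, k1, k2=0.0):
    """Remove radial lens distortion from a grayscale image (list of rows).

    Assumes a simple radial (Brown-Conrady) model; k1, k2 and the pinhole
    intrinsics fx, fy, cx, cy are hypothetical calibration values.
    Negative k1 models barrel distortion, typical of wide-angle lenses.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            # Normalized coordinates of the ideal (undistorted) output pixel
            x = (u - cx) / fx
            y = (v - cy) / fy
            r2 = x * x + y * y
            # Forward distortion model: where this ray lands in the raw image
            scale = 1.0 + k1 * r2 + k2 * r2 * r2
            ud = fx * x * scale + cx
            vd = fy * y * scale + cy
            # Nearest-neighbour sampling; rays that fall outside stay black (0)
            iu, iv = round(ud), round(vd)
            if 0 <= iu < w and 0 <= iv < h:
                out[v][u] = image[iv][iu]
    return out


# Hypothetical 5x5 test image and calibration values
raw = [[r * 10 + c for c in range(5)] for r in range(5)]
corrected = dewarp(raw, fx=2.0, fy=2.0, cx=2.0, cy=2.0, k1=-0.2)
```

In practice the per-pixel mapping is usually computed once per calibration and reused for every frame, with bilinear rather than nearest-neighbour sampling; OpenCV, for example, provides `cv2.undistort` and a separate `cv2.fisheye` module for this.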

Typical surround-view functions include image stitching and re-projection. Here, vision technology offers structure from motion and automated parking assistance, such as parking marker detection, free parking space detection and obstacle detection, in addition to all of the rear camera vision functions.
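Re-projection in a surround-view system can be sketched with a planar homography: assuming the ground is flat, a 3x3 matrix maps each camera's pixels onto a common top-down grid, and it can be estimated from four ground markers seen by the camera. The function names and marker coordinates below are hypothetical, and the direct linear solve with the bottom-right entry fixed to 1 is one standard way to estimate a homography, not necessarily what production systems use.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_points(src, dst):
    """Estimate 3x3 H (with H[2][2] = 1) mapping 4 image points to 4 ground points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), rearranged to be linear
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(h, x, y):
    """Map image pixel (x, y) to ground-plane coordinates using homography h."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical calibration: four markers forming a 2 m x 1 m rectangle on the
# ground appear as a trapezoid in the camera image (pixel coordinates).
image_pts = [(100, 250), (300, 250), (350, 350), (50, 350)]
ground_pts = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
H = homography_from_points(image_pts, ground_pts)
```

Each camera gets its own matrix; warping all camera images into the same ground-plane grid lines them up for stitching. OpenCV offers `cv2.findHomography` and `cv2.warpPerspective` for the estimation and warping steps.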

Replacing side mirrors with cameras will reduce drag and expand the driver’s view. Typical functions include image stitching and lens dewarping, blind spot detection and rear collision warning. Vision technology adds object detection, optical flow and structure from motion, as well as the basic features found in rear cameras.
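Optical flow estimates how image content moves between frames. A minimal sketch of the classic Lucas-Kanade method for a single window: assume brightness is constant and motion is small, so each pixel satisfies `Ix*u + Iy*v + It = 0`, and solve for the motion `(u, v)` by least squares over the window. The function name, window size and synthetic frames are illustrative assumptions; the talk did not say which optical flow method is used.

```python
def lucas_kanade(prev, curr, x0, y0, half=3):
    """Estimate motion (u, v) of the window centred at (x0, y0) between two frames.

    Frames are grayscale images given as lists of rows; the window must lie
    at least one pixel inside the image borders.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            # Central-difference spatial gradients on the first frame
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            # Temporal gradient between the two frames
            it = curr[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("window has too little texture (aperture problem)")
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt] with Cramer's rule
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


# Synthetic frames: a smooth "bowl" pattern that moves by (+0.3, -0.2) pixels
prev = [[(x - 5) ** 2 + (y - 5) ** 2 for x in range(11)] for y in range(11)]
curr = [[(x - 5.3) ** 2 + (y - 4.8) ** 2 for x in range(11)] for y in range(11)]
u, v = lucas_kanade(prev, curr, 5, 5)
```

The 2x2 system becomes singular on featureless patches, which is the aperture problem. Practical implementations also use image pyramids to handle larger motions, as in OpenCV's `cv2.calcOpticalFlowPyrLK`.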

Typical functions of a front camera, which supports speed and steering control, are emergency braking, auto cruise control, pedestrian and vehicle detection, lane detection and keeping, traffic sign recognition, headlight control, bicycle recognition (expected in 2018) and intersection handling (expected in 2020).

Driver monitoring, which is already found at L0, includes driver drowsiness detection, driver distraction detection, airbag deployment, seatbelt adjustment and driver authentication. Here, vision technology offers face detection and analysis, as well as driver posture detection.

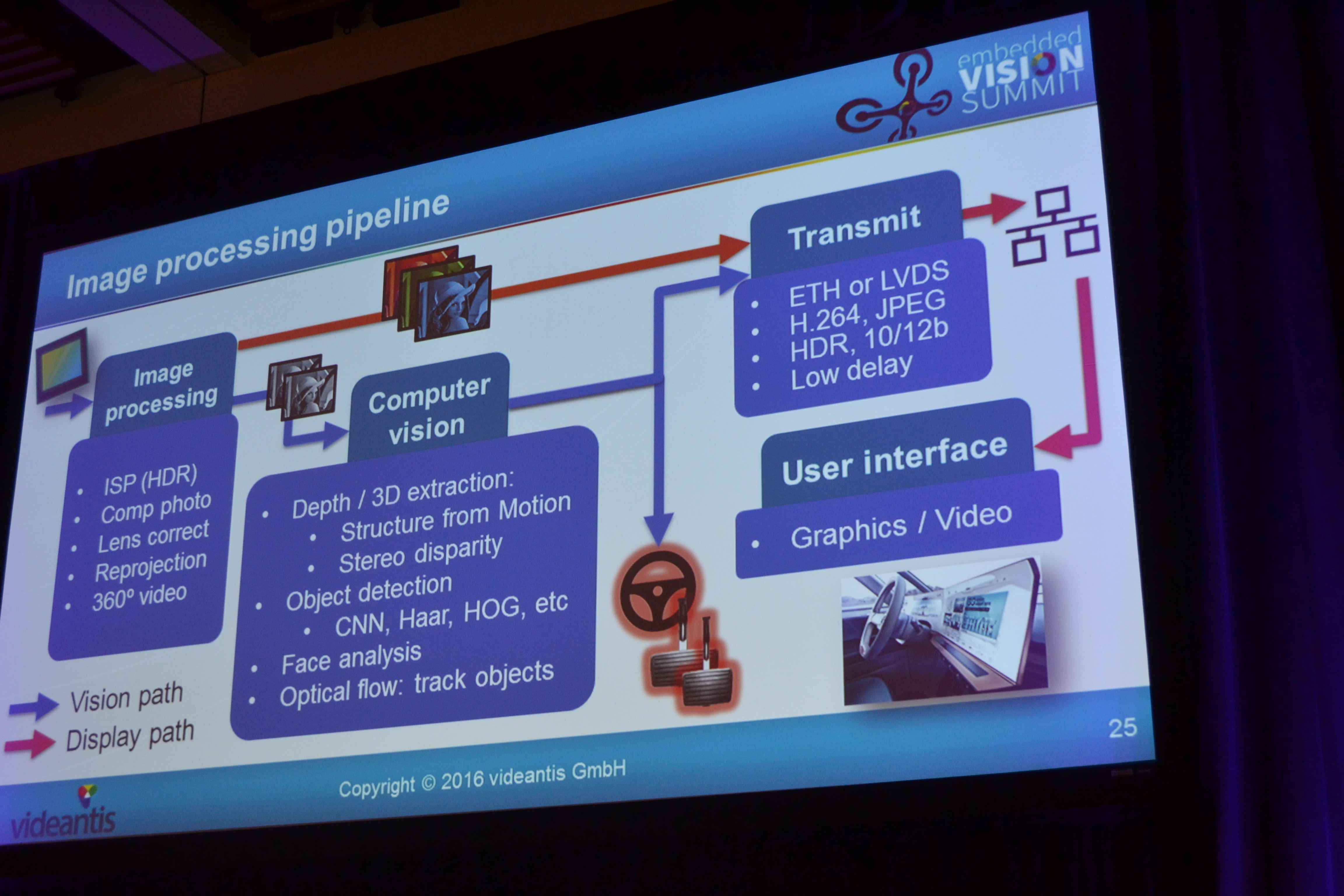

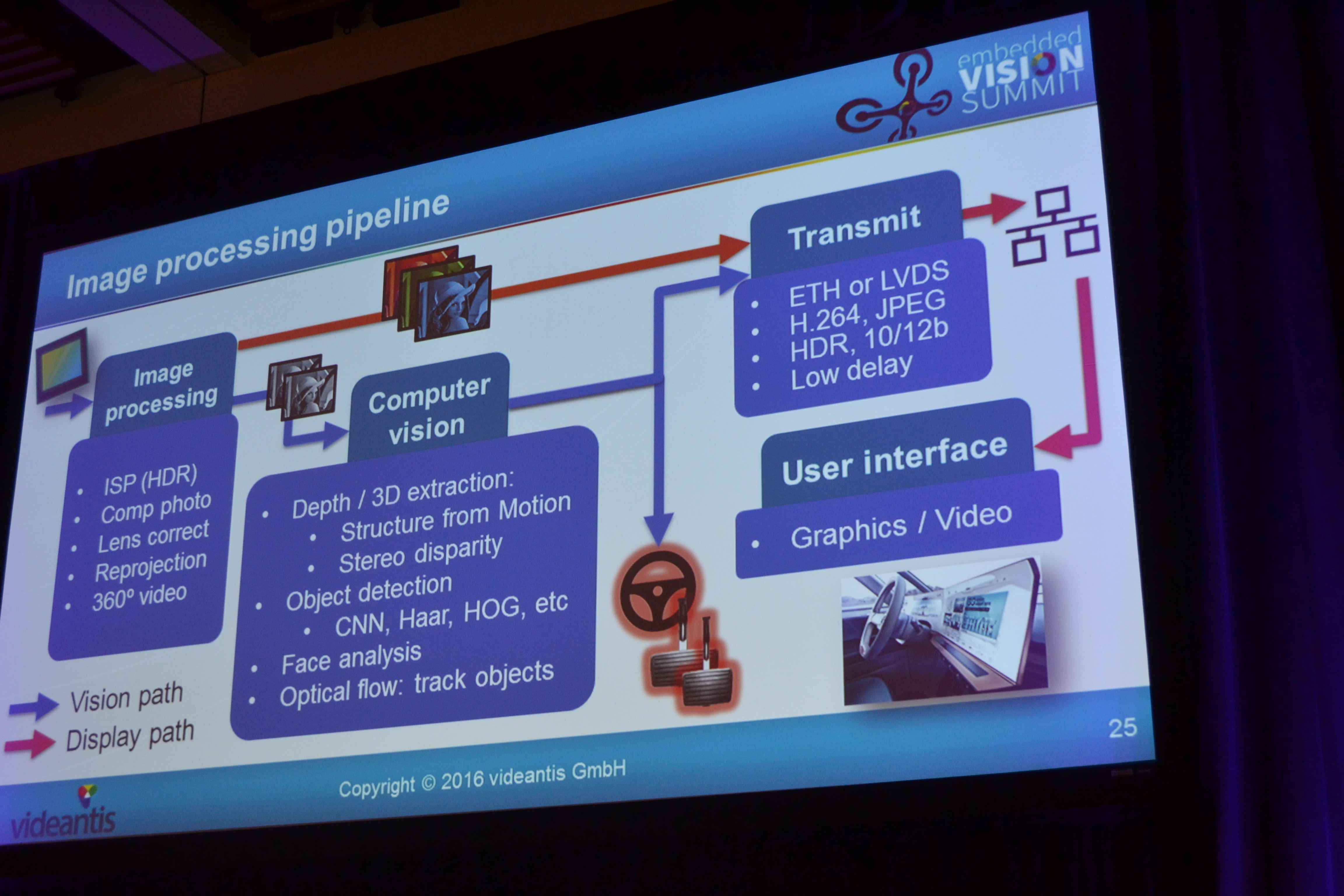

Here is the image processing pipeline:

The speaker also discussed the challenges. Systems must work under all conditions: cold and hot (requiring low power), dark and light (requiring HDR and noise handling), dirty lenses, detection at different angles, a speed range of 0 to 120 mph (requiring different algorithms to be selected), and a loaded or dinged car (requiring calibration). They must also work under severe power constraints: a power source of 100 W, small form factors that limit heat dissipation, and a complete smart camera that must consume less than 1 W.

Another challenge is choosing between centralized and distributed processing, as both have pros and cons. The advantage of centralized processing is that a single processing platform eases software development. The disadvantages are that even an entry-level car needs a high-end head unit, the system is neither scalable nor modular, and adding cameras overloads it. Distributed processing seems to have more advantages: a low-end head unit suffices, options become plug-and-play, and every camera adds processing capability. The cost is a more complex system. In reality, some cars today already have 250 ECUs.
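The scaling argument behind centralized versus distributed processing can be made concrete with a toy model. It assumes each camera contributes a fixed vision workload and that, in the distributed case, the head unit only fuses per-camera results; all names, the GOPS unit and every number below are hypothetical, and the model ignores the extra system complexity the speaker mentioned.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    name: str
    load_gops: float  # hypothetical vision workload of this camera's stream, in GOPS


def central_ok(cameras, head_unit_gops):
    """Centralized: all camera workloads run on the single head unit."""
    return sum(c.load_gops for c in cameras) <= head_unit_gops


def distributed_ok(cameras, per_camera_gops, head_unit_gops, fusion_gops_per_camera):
    """Distributed: each smart camera runs its own vision workload locally,
    and the head unit only fuses the per-camera results (a simplifying assumption)."""
    each_camera_fits = all(c.load_gops <= per_camera_gops for c in cameras)
    fusion_fits = fusion_gops_per_camera * len(cameras) <= head_unit_gops
    return each_camera_fits and fusion_fits


# Hypothetical numbers: cameras at 50 GOPS each and a 300 GOPS central head unit,
# versus 60 GOPS smart cameras feeding a 50 GOPS low-end head unit.
cameras = [Camera(f"cam{i}", 50.0) for i in range(10)]
for n in (4, 6, 8, 10):
    print(n, "cameras:", "central", central_ok(cameras[:n], 300.0),
          "| distributed", distributed_ok(cameras[:n], 60.0, 50.0, 2.0))
```

With these made-up numbers the centralized check fails once more than six cameras are attached, while the distributed check keeps passing because each added camera brings its own processing.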

Jacobs’s final conclusions were that the business opportunity is huge (over $1T); self-driving car technology causes a paradigm shift, so new players can grab market share; automotive is not like consumer electronics; there will be no self-driving cars in the next 10 years, as change will be gradual, with many driver-assist functions based on vision technologies; and efficient computer vision systems are the key enabler for making our cars safer.