by Lidia Paulinska | Jun 14, 2016

GTC, May 2016 – Jen-Hsun Huang, the co-founder and CEO of Nvidia, one of the largest American manufacturers of graphics accelerator chips, announced at the GPU Technology Conference in California that VR is going to change the way we design and experience products, such as when shopping for cars. It is like being in a virtual showroom where we can walk around our custom-designed car, open its door and check out the car’s interior.

Two amazing virtual reality demos took place at the conference – Everest VR and Mars 2030.

For the “Everest VR” demo, Nvidia partnered with Solfar, a Nordic VR games company, and RVX, a Nordic visual effects studio for the motion picture industry. RVX has worked on movies such as “Gravity”, which won the Oscar for Visual Effects. Using advanced stereo photogrammetry, a CGI (computer-generated imagery) model of Mount Everest was created pixel by pixel.

For “Mars 2030”, Nvidia worked with scientists and engineers at NASA, along with Fusion VR, using images from dozens of satellite flybys of Mars. They reconstructed 8 square kilometers of the planet’s surface. Even the rocks were hand-sculpted, with millions of them carefully placed based on the satellite images.

Steve Wozniak, a co-founder of Apple, was invited to experience the Mars 2030 demo. As soon as he slipped on his headset, he was transported to a rover to drive around the planet.

Here are the key moments in VR history.

It started in 1939 at a trade show in New York City, where View-Master was introduced as a stereoscopic alternative to the panoramic postcard. About 30 years later, Ivan Sutherland came up with the first head-mounted display, called “The Sword of Damocles”. Another quarter century or so passed before the computer games company Sega introduced wrap-around VR glasses at CES in 1993. Two years later Nintendo produced a gaming console named Virtual Boy.

The real gold rush for virtual reality started in 2010.

That year Google came out with a 360-degree version of Street View on Google Maps. In 2012 a small company, Oculus, collected $2.4 million for the production of VR glasses. Two years later, Oculus was purchased by Facebook, led by founder Mark Zuckerberg, for $2 billion! Market research analysts from Deloitte, CCS Insight, Barclays and Digi-Capital forecast that 24 million VR devices will be sold by 2018, and that revenue from virtual and augmented reality products and content will reach the $150 billion mark.

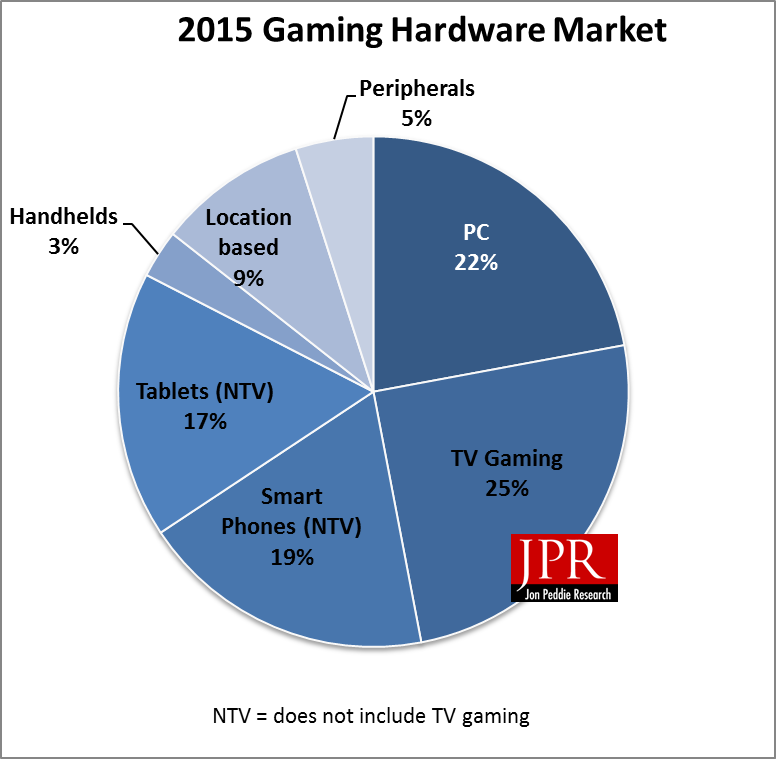

So far, virtual reality has been associated mostly with the gaming industry, because the hard-core gaming community is willing to spend large amounts of money on special-purpose hardware such as VR glasses and game consoles. But that is changing. VR is appearing in different sectors of business and entertainment.

Yes, it is known that VR may cause motion sickness for some viewers, and there are still a number of obstacles to work on, but the technology is unstoppable now.

by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – The original title of the talk by Larry Matthies, Senior Scientist at NASA’s Jet Propulsion Laboratory, was “Using Vision to Enable Autonomous Land, Sea and Air Vehicles”. Matthies added “space” to emphasize the full range of surroundings these vehicles operate in. “How could I forget about space?” he noted. When we think about autonomous vehicles, what comes to mind are vehicles on the road, but scientists are continuing to develop driverless vehicles for sea, air and space.

Matthies mentioned that the primary application domains and main JPL themes for autonomous vehicles are now as follows: 1. Land: all-terrain autonomous mobility; mobile manipulation. 2. Sea: USV escort teams; UUVs for subsurface oceanography. 3. Space: assembling large structures in Earth orbit. 4. Air: Mars precision landing; rotorcraft for Mars and Titan; drone autonomy on Earth.

Then he described some of the capabilities and challenges that the scientists are facing. One important capability is absolute and relative localization. The key challenges in this domain are: appearance variability, lighting, weather, seasonality, moving objects, and fail-safe performance. Localization has been tested on wheeled, tracked, and legged vehicles in both indoor and outdoor settings, as well as with drones, and Mars rovers and landers.

Another capability the speaker discussed is obstacle detection. This capability covers stationary and moving objects, obstacle type identification and classification, and the ability to determine whether terrain can be traversed. Complementing the detection functions are the understanding of other scene semantics such as landmarks, signs and destinations, perceiving people and their activities, and perception for grasping.

The challenges facing the sensors are non-trivial. Difficult image characteristics include fast motion and variable lighting conditions such as low light, no light, and very wide dynamic range. The environment can have atmospheric conditions such as haze, fog, smoke or precipitation; difficult object properties such as featureless, specular, and transparent surfaces; and difficult terrain types such as obstacles hidden in grass, water, snow, ice, and mud. Finally, the last challenge that developers must address is the tradeoff between computational cost and available processor power.

by Lidia Paulinska | Jun 13, 2016

Embedded Vision Summit, May 2016 – Marco Jacobs from Videantis talked about the status, challenges and trends in computer vision for cars. Videantis, which has been in business for over 10 years, is the number 1 supplier of vision processors. In 2008 the company moved into the automotive space.

What is the future of transportation?

We will definitely be travelling less than today, typically less than 100 miles in a day. The only autonomous people mover today is the elevator. So when can we expect autonomous cars on our roads? At CES 2016 the CEO of Bosch answered: “Next decade maybe”. For now we have low-speed and parking assistance, but highway driving, exit to exit, is expected around 2020.

Autonomous vehicle development is described in levels that range from L0 to L5. Levels L0, L1 and L2 are in production today. At L0 the driver fully operates the vehicle. At L1 the driver holds the wheel or controls the pedals while the vehicle steers or controls speed. At L2 the vehicle drives itself, but not 100% safely, so the driver must monitor it at all times. L3 still needs R&D, and L4 and L5 are where the paradigm changes.

How does the market look in numbers?

There are 1.2B vehicles on the road; 20 OEMs each produce over 1M vehicles a year; 100M cars are sold each year; 100 Tier 1 suppliers each generate over $1B in revenue ($800B combined); it is a less than $1T business excluding infrastructure, fuel, and insurance; and around 25% of the cost is electronics.

Today, new cars carry an average of 0.4 cameras. There is an opportunity to increase that number to 10 cameras per car to extend visibility. L0 vehicles have rear, surround and mirror cameras. L2 and L3 vehicles will have rear, surround, mirror and front cameras.
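To put those camera counts in perspective, the short Python sketch below multiplies the per-car averages by the roughly 100M cars sold each year quoted earlier. The function name and the assumption that annual sales stay flat are illustrative choices, not figures from the talk.

```python
# Back-of-the-envelope estimate of annual automotive camera volume.
# Assumption (not from the talk): annual car sales stay flat at 100M.
CARS_SOLD_PER_YEAR = 100_000_000  # "100M cars sold each year"


def cameras_per_year(cameras_per_car, cars=CARS_SOLD_PER_YEAR):
    """Return the number of cameras shipped in new cars per year."""
    return cameras_per_car * cars


today = cameras_per_year(0.4)      # average of 0.4 cameras per new car
potential = cameras_per_year(10)   # the 10-cameras-per-car opportunity
growth = potential / today         # how many times larger the volume becomes

print(f"Today:     {today:,.0f} cameras per year")
print(f"Potential: {potential:,.0f} cameras per year")
print(f"Growth:    {growth:.0f}x")
```

Under these assumptions the volume grows from about 40 million to 1 billion cameras a year, a 25-fold increase.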

Typical rear camera functions include a wide-angle lens, lens dewarping, graphics for guidelines, and H.264 compression for transmission over automotive in-car Ethernet. Vision technology allows for real-time camera calibration, dirty lens detection, parking assistance, cross traffic alert, backover protection and trailer steering assistance.

Typical surround view functions include image stitching and re-projection. Vision technology in this application offers structure from motion and automated parking assistance such as marker detection, free parking space detection and obstacle detection, in addition to everything that the rear camera vision functions include.

Replacing mirrors with cameras will reduce drag and expand the driver’s view. Typical functions include image stitching and lens dewarping, blind spot detection and rear collision warning. Vision technology offers object detection, optical flow and structure from motion, as well as the basic features found in rear cameras.

Typical functions of a front camera, which supports control of speed and steering, are emergency braking, automatic cruise control, pedestrian and vehicle detection, lane detection and keeping, traffic sign recognition, headlight control, bicycle recognition (2018) and intersection handling (2020). Driver monitoring, which is already present at L0, has features such as driver drowsiness detection, driver distraction detection, airbag deployment, seatbelt adjustment and driver authentication. Here vision technology offers face detection and analysis, as well as detection of the driver’s posture.

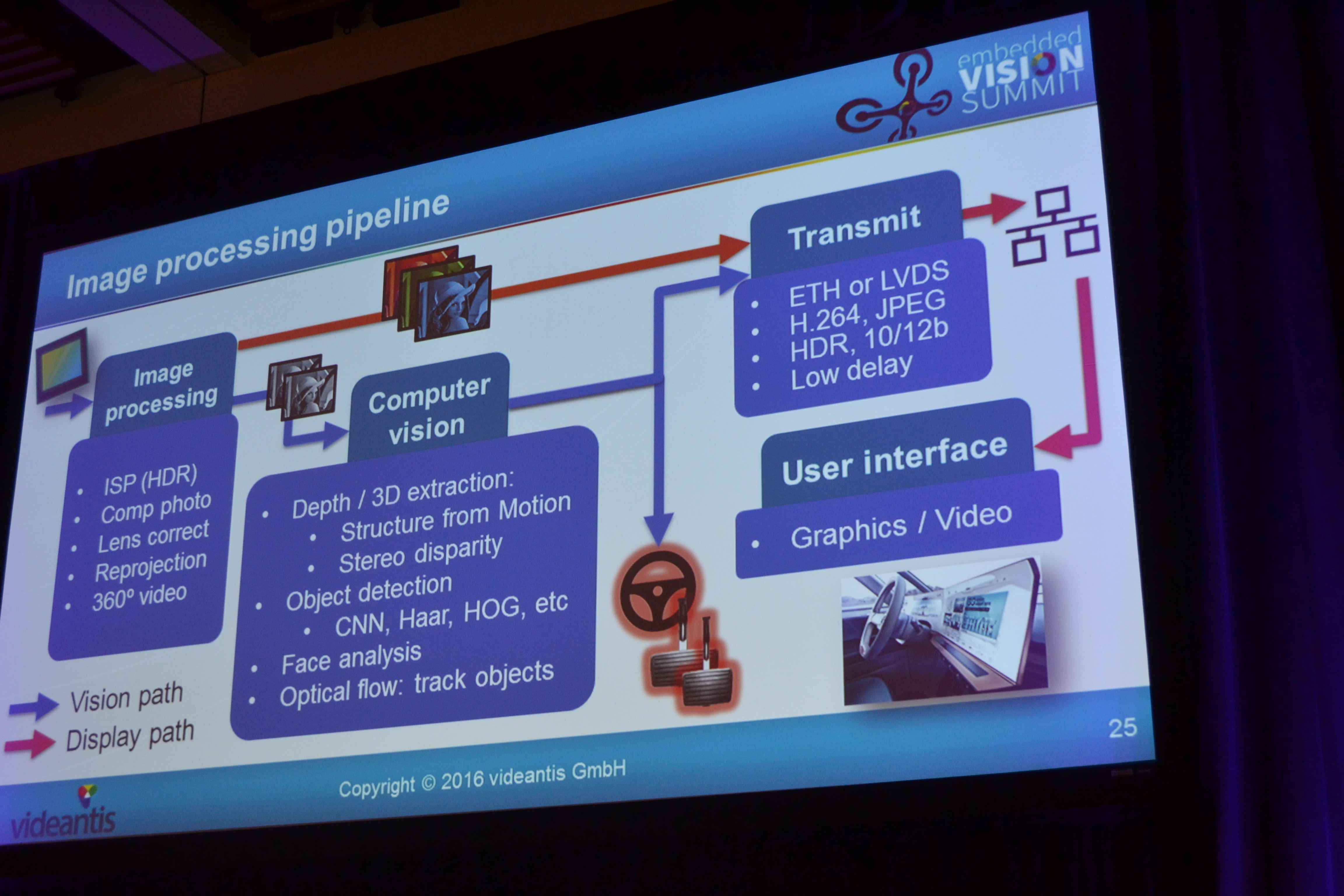

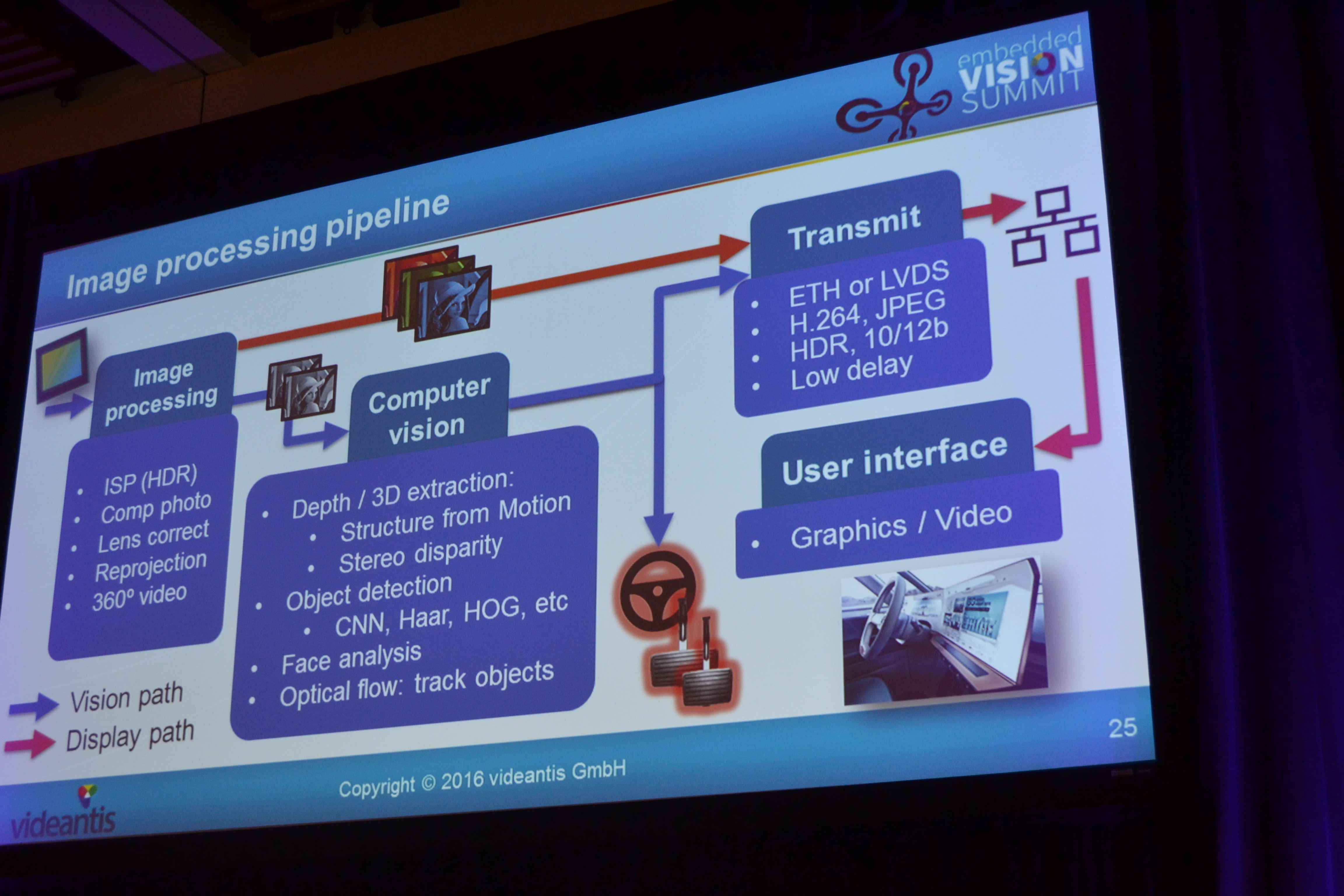

Here is the image processing pipeline:

The speaker discussed several challenges. The first is working under all conditions: cold and hot (low power); dark and light (HDR, noise); dirty lens; detect angles; operating over a speed range of 0-120mph (the need to select different algorithms); and a loaded or dinged car (calibration). Another challenge is working under severe power constraints: a power source of 100W, small form factors that limit heat dissipation, or a complete smart camera running on less than 1W. A further question is which option is better, centralized or distributed processing, as both have pros and cons. The advantage of central processing is that a single processing platform eases software development. The disadvantages are that an entry-level car also needs a high-end head unit, that it is neither scalable nor modular, and that adding cameras causes system overload. Distributed processing seems to have more advantages: a low-end head unit, options that become plug-and-play, and processing capability that grows with every camera added. The cost is a more complex system. The reality today is that some cars have 250 ECUs.

Jacobs’s final conclusions were: the business opportunity is huge (>$1T); self-driving car technology causes a paradigm shift (new players can grab market share); automotive is not like consumer electronics; there will be no self-driving cars in the next 10 years (change will be gradual, with lots of driver assist functions based on vision technologies); and efficient computer vision systems are the key enabler for making our cars safer.

by Lidia Paulinska | Jun 12, 2016

IMS, May 2016 – At the International Microwave Symposium this year, a legendary inventor and father of the cellphone, Dr. Martin Cooper, spoke at the plenary session on Monday, May 23. In 1973 Dr. Cooper was working for Motorola when he presented the first handheld cellular phone to the world. Ten years later, the “DynaTAC 8000x” model was released to the market. Today it is hard to believe that the first cellphone weighed almost 2 pounds and looked and felt like a brick. That first model retailed for $3,995 and, with its 30 minutes of battery life, was far from the functionality and beauty of today’s standards. Since that time, cellular phones have become less expensive, and with upgrades and vast technology improvements the mobile phone has been accepted as part of mainstream life on its way to conquering the world. In the last 20 years, the number of cellular phones rose from 12 million to 5 billion. Dr. Cooper maintains that, although the phone contains amazing semiconductor and other advanced technologies, the phone itself is still in its infancy. Personal wireless connectivity has the potential to change our approach to the health care and education sectors.

Unfortunately, Dr. Cooper’s speech was disrupted twice by fire alarms, and some time was lost to the clearance procedures, but having this accomplished inventor at IMS was a real treat for the attendees.

by Lidia Paulinska | May 30, 2016

Just two more weeks until the Electronic Entertainment Expo (E3), the world’s premier trade show for computer and video games, which is being held at the LA Convention Center. More than 20,000 attendees are expected, along with many new products and announcements, and for the first time fans and enthusiasts of electronic entertainment can be part of this exciting event. E3 Live will be situated in Downtown Los Angeles’ sports and entertainment district and will be open to the ticketed public.

E3 and E3 Live will take place from Tuesday, June 14 through Thursday, June 16. Before the doors open for the show, let’s get a glimpse of the state of the industry.

The games industry is the fastest growing sector of the modern entertainment industry and is part of modern culture. Today game enthusiasts expect a richer, fuller and more involving game experience. To satisfy such a demanding clientele, game development has become more dynamic and multidirectional, and the palette of emotions, both in the game and experienced by the player, has greatly expanded. Production studios strive for authenticity and pay meticulous attention to detail, bringing players to a new level of realism, which may include 3D technology that changes flat images into three-dimensional images, and photo-realistic events of cinematic quality. Today’s game has a story line, uses state-of-the-art visual graphics and employs new, advanced forms of interaction with the player. In addition, there is a growing number of artists in the games industry, who get to express themselves creatively and individually. The impact of games on mass culture is unquestionable and its value is growing at a dynamic pace.

The big attraction this year is the realization of the hype created by Virtual Reality (VR) products. Not only is VR present in a number of new platforms from companies like Oculus, Sony, Samsung, Razer, HTC and others, but the show will feature a full VR ecosystem: accessories for controlling the game, walking around and being fully physically immersed in it, new sensors, games from major publishers and companies, as well as new experiences from independent developers.

The other major attraction at the show is the availability of accessories and peripherals for the new consoles and high-performance PCs released in the past couple of years. These accessories (charging devices, extension cables, headphones and controllers; performance accessories such as CPU and memory coolers; and skins and decorative items) take a little while to develop after the platforms are released, as they require software titles to make their market entry before the user community commits to adopting the large variety of branded items.

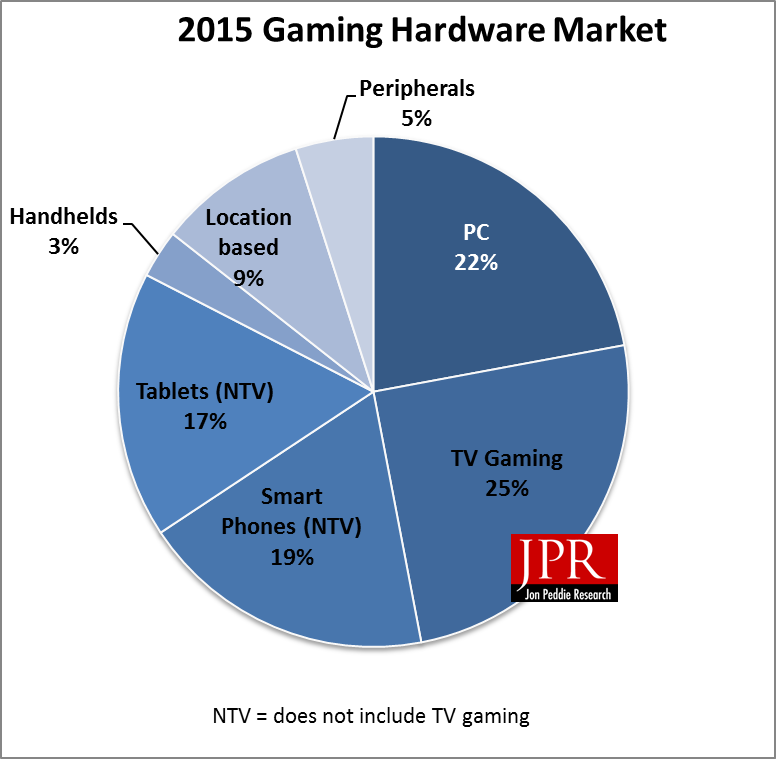

The industry topped $131B USD in sales covering the hardware, software and accessories marketplace.

More info at http://www.e3expo.com/url_takeover

by Lidia Paulinska | May 30, 2016

NAB, April 2016 – OWC is the abbreviation for Other World Computing, one of the longest running third-party computer component and storage solutions providers in the personal computer industry. The company started with individual users and small companies and now supports enterprise customers and cloud providers with its products. In a casual and comfortable one-on-one discussion, we sat down with its CEO and founder to talk about his journey and the company.

It seems like yesterday, said Larry O’Connor, when I asked about establishing his company at such an early age. He was 14 years old, a young entrepreneur from Woodstock, Illinois. It was in the middle of nowhere – he recalled – “My dad used computers in his business, he had a TRS-80, so I was exposed to this “stuff” at an early age.” He upgraded it.

I do think that was a disruptive business at that time, and I did think I could do it better – he stated.

He recalled seeing a man come to their place to do an upgrade on a computer. The technician spent most of his time sitting with his feet on the desk, reading a newspaper. It was then he realized that the paperwork took more time than the effort and process of upgrading the computer. He explained that he then realized that all the folks who need a memory upgrade don’t need to go to a local computer store. I don’t need to go to the computer store. I can support them and educate them, upgrade it, and even show them how to do it faster and for a better price.

In those days, back in the early 1980s, he found that prices were not changing that quickly and the major computer sales magazines listed prices and multiple suppliers for memory, storage and all the accessories. He then found that AOL had an online store, chat rooms and an 800 phone number, so his age did not matter, just his knowledge and enthusiasm, when it came to selling products. This was also the start of the rise of the overnight shipping industry, which was convenient as he could not drive to buy parts or pick up systems for service.

The company started by providing services with known parts going into known computers. He then started identifying new components that would improve the performance of the computers his customers had without making them buy a whole new system. He also started to build devices and software that would allow existing parts and peripherals to work with new operating systems and computers.

The driving factor is that he cares about customers, their problems and how to make computers a productivity tool for them rather than an obstacle in their path that takes a big effort to learn. He has kept this mentality at the core of the company, and found like-minded people along the way.

When asked how he made the transition from one person at his parents’ house to running a company that just relocated because it had exhausted the local talent pool, he said that he does not feel he runs the company. While people work for him and may report to him, he has to report to everyone at the company and to the customers – he is responsible to everyone for what they are doing.

The company’s focus is still on solving customer problems. He sees the future in software that solves problems and reduces expense for customers while preserving the value of legacy hardware. He feels that computers and accessories are made obsolete by software applications faster than they stop working and need to be replaced. Software and support can help extend the useful life of a lot of legacy technology for customers at a fair price.

by Lidia Paulinska | May 28, 2016

SID Display Week, May 2016 – Digital signage is used to entice and influence shoppers on their way to retail stores and shopping malls. Tablets that replace paper menus allow restaurant patrons to preview and customize their orders. Engaging visual displays are revolutionizing leisure experiences, promoting increased consumer engagement through features such as instant replay. Digital signage is a modern communication tool.

At the business session at SID Display Week, the presenters Sanju Khatri, Director of Digital Signage at IHS, Jennifer Davis from Leyard, Karen Robinson from NanoLumens and Paul Apen, Chief Strategy Officer of E Ink, gave a broad overview of the applications of digital signage, a ubiquitous communication tool of our time.

Sanju Khatri described the needs of outdoor and high-brightness indoor digital signage applications. To cope with direct sunlight and heat load, outdoor displays need more than 2000 nits of brightness, and indoor displays need 1000 nits or higher. The brightness of LCDs can be further enhanced by adding LED backlights and increasing power consumption. However, as LED backlights age, they deliver less light output per watt of power. Cooling mechanisms are essential to maintain the lifetime of displays, and enclosures are IP 64 and IP 65 rated. To cope with harsh and changing weather conditions, outdoor displays need to withstand heat, humidity, snow, rain, wind, dust, fumes and dirt. Scratch and impact resistance of the cover glass is important for both high-brightness indoor and outdoor displays set in high-traffic areas. To cope with heavy usage cycles and vandalism, digital signs placed in public settings need the additional protection of a cover material such as glass or polycarbonate. Serviceability is another important need. Downtime of publicly placed outdoor or indoor advertising displays results in lost advertising revenue. Quick service turnaround, with display modules accessible for on-site repair and maintenance, is extremely important.

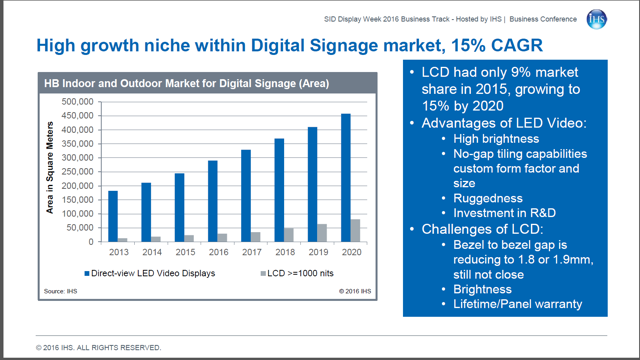

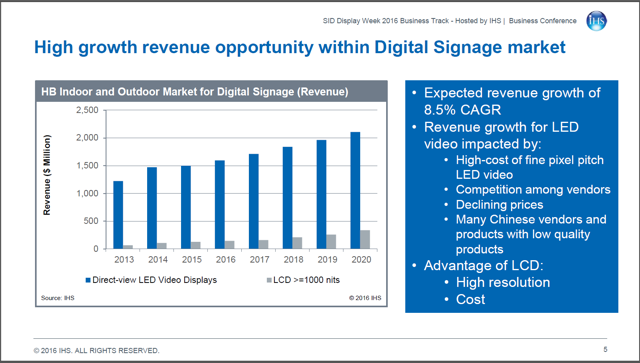

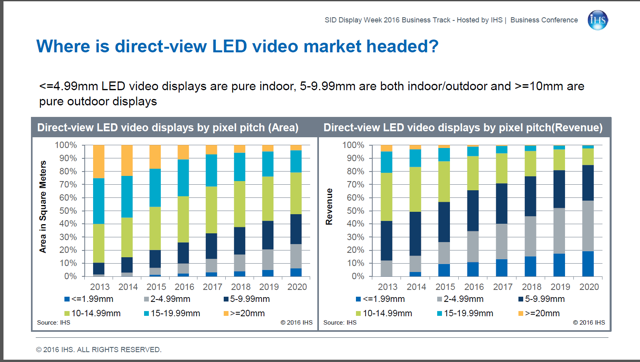

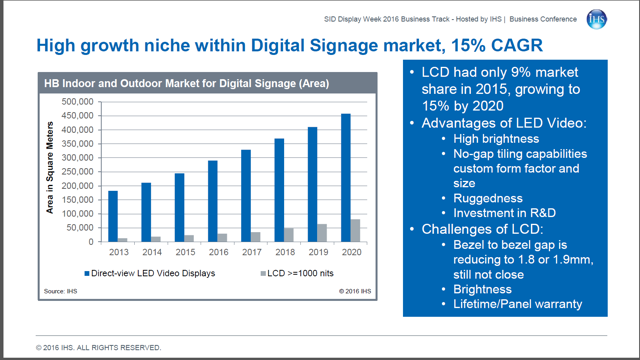

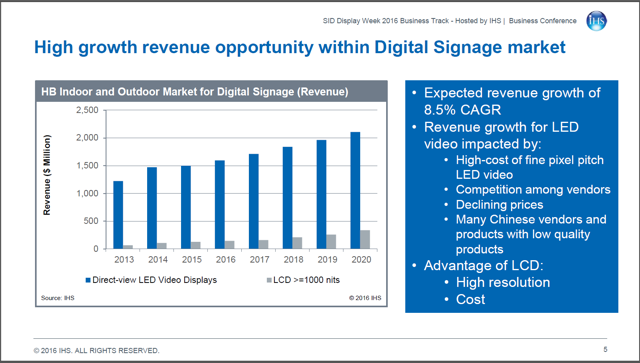

The digital signage market is growing in unit numbers as well as in revenue:

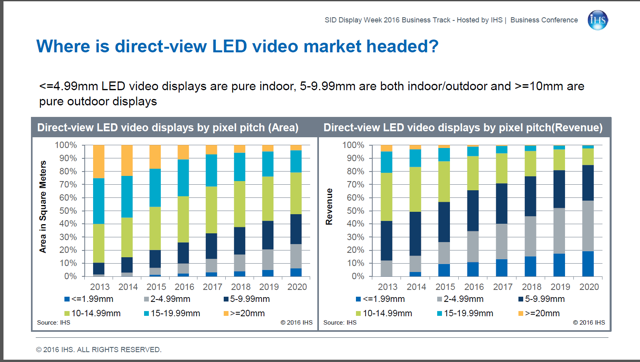

The stats below portray direct-view LED video displays by pixel pitch, in terms of area (square meters) and revenue.

Jennifer Davis from Leyard described the market requirements for digital signage in simple words: impact and value, meaning cost effectiveness relative to the outcome. These include features such as: seamless (or as close to it as customers can afford), highly reliable for long-duty-cycle applications (all day to 24×7), bright enough and with a wide enough color range to have brand impact, and good value.

What are the possibilities in the LED market?

There is no doubt that the LED market is expanding. The segment with less than 1.9mm pixel pitch grew 279% year over year in 2015, and its visibility also grew at related shows such as ISE and InfoComm China, where more fine-pitch LED offerings and vendors were showcased. Falling costs, combined with price elasticity, are driving LED market growth. LED now costs 13% more than rear-projection cubes and 7x as much as tiled LCD, down from 200% and 21x two years ago. Davis stated that future growth will be determined by innovation and market maturation.

Karen Robinson from NanoLumens shared her view on the current generation, which is driven by a passion for social media that allows sharing and viewing video. Digital signage is a ubiquitous communication tool: TV displays are rising in quality, not just quantity; mobile phone displays continue to improve; and DOOH (digital out-of-home) advertising has shifted from vinyl-wrapped boards to vibrant LEDs. The demands of this display-driven generation, together with smartphone and tablet adoption, have added new screens. Just as a reminder – in 2000 it was not possible to stream video over a phone. By 2018, over 2.5 billion consumers will be able to stream video on their smartphones, and there will be 1.5 billion tablet users worldwide by 2019. Today the average household has 5 or more video-enabled devices. Traditional signage, billboard and display advertising is rapidly shifting to digital and is changing the way we eat, shop, work, play and live. By automatically profiling customers and their expected behaviors ahead of their actual presence, a vendor can quickly customize and personalize the content. Displays are becoming more interactive. Robinson presented a few digital displays from around the world that succeed in creating engaging and immersive experiences: American Airlines Arena in Miami, Florida; Holt Renfrew in Ontario, Canada; the Mac Cosmetics store in Waikiki, Honolulu, Hawaii; Pacific Fair in Broadbeach, Australia; Sport Chek in Toronto, Canada; Telstra in Sydney, Australia; and the T-Mobile store in New York City, NY. Those examples combine four areas: Architecture, Information, Advertising and Art – she stated.

Paul Apen, Chief Strategy Officer of E Ink, talked about ePaper as an alternative form of signage. Such displays allow outdoor readability, unique form factors and off-grid installations. He stated that ePaper can be used in many areas, such as transportation, conference rooms, public spaces and retail. Effective signage design should also complement its environment, as ePaper does.

Dynamic content management is the next thing coming. There will be more relevant content delivered directly to the store, location, and customer. The economics of the business are also allowing prices to go down. That is the trend now.

by Lidia Paulinska | May 22, 2016

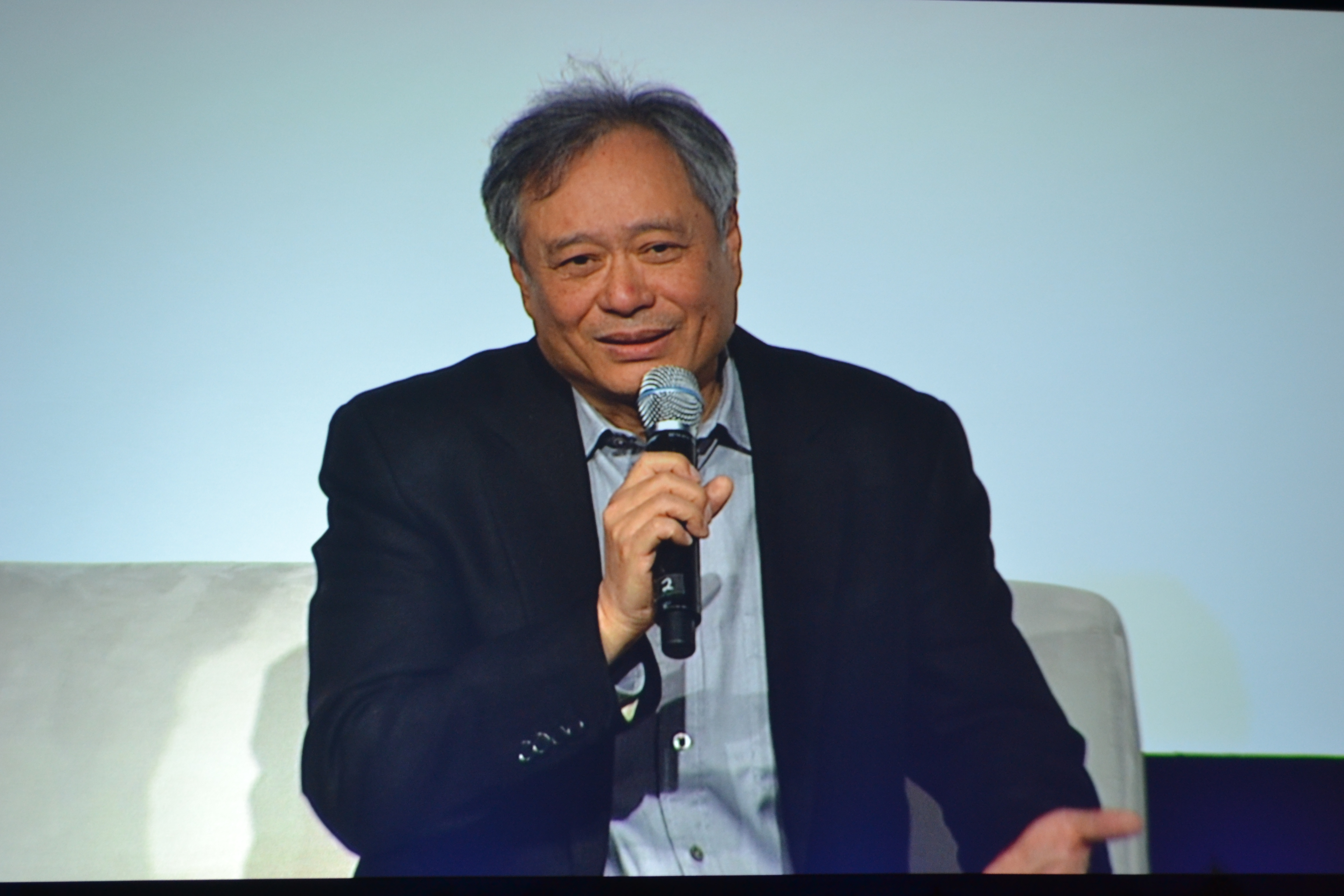

April 2016, NAB – At the start of NAB, SMPTE held the Digital Cinema Summit. The highlight speaker was Ang Lee, who spoke following a discussion arguing that HDR is not a tradeoff for future films but a technology that must be adopted because of its benefits for the storytelling and immersive aspects of films. He spoke on pushing the limits of cinema.

One of the examples was his film “Life of Pi”. In this film, the story is the enticing element. One aspect of the story was how to visually represent an irrational number and bring it to the screen as an experience. The film used 3D as an extra dimension in this storytelling. HDR and HFR are also new technologies that can help with storytelling. HFR (High Frame Rate) has seen a recent resurgence as an alternative to the traditional 24fps, and has been championed at NAB and other events by Doug Trumbull. Doug has been advocating 120fps content for both 2D and 3D films. Doug’s latest workflows include cameras, servers and an editing flow that support 3D, HDR, and 4K, all at 120fps.

The workflow has over 40x the data of standard film, but produces an entirely different cinema experience. At NAB there were previews of an 11-minute clip of Ang Lee’s new film “Billy Lynn’s Long Halftime Walk”, which was shot in 4K, HDR, 3D at 120fps. The preview was shown using dual Christie laser projectors and standard surround sound.
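One way to see where a factor of about 40 can come from is to multiply the ratios for resolution, number of views and frame rate. The Python sketch below does that arithmetic under an assumed baseline of 2K (2048×1080), 2D, 24fps; the baseline and the function name are illustrative assumptions, and the extra bit depth required by HDR is ignored, which would push the figure above 40.

```python
# Rough raw-data multiplier for a 4K, 3D, 120fps workflow.
# Assumed baseline (not stated in the article): 2K, 2D, 24fps.
# HDR bit depth is ignored here; it would increase the result further.

def data_multiplier(width, height, views, fps,
                    base_width=2048, base_height=1080,
                    base_views=1, base_fps=24):
    """Return how many times more raw image data a format produces
    compared with the baseline format."""
    pixel_ratio = (width * height) / (base_width * base_height)
    view_ratio = views / base_views   # 3D needs one image per eye
    frame_ratio = fps / base_fps
    return pixel_ratio * view_ratio * frame_ratio


# 4K (4096x2160), stereo 3D (2 views), 120 frames per second
multiplier = data_multiplier(width=4096, height=2160, views=2, fps=120)
print(multiplier)  # 4 (pixels) * 2 (views) * 5 (frame rate) = 40.0
```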

The clip exposed viewers to a new level of “clarity of image” that has not been seen before by cinema audiences. This clarity of image poses a new challenge for the storyteller: to use the technology to enhance the story being told. HFR also brings new cinematic capabilities to both 2D and 3D films, along with a new level of brightness and smoothness in playback, which can be used to enhance cinematic emotions and action without causing viewer fatigue. The common reaction of the audience after viewing the clip, mostly to the technology and secondarily to its cinematic use, was “WOW!”.

by Lidia Paulinska | May 22, 2016

April, NAB – At the end of the day, the market is more and more competitive and we need our customers not only to survive but also to thrive – stated Charlie Vogt, CEO of Imagine Communications (IC) and moderator of the power session “Transformation 2016: Media Technology Using IP, Cloud and Virtualization”. The session, which took place at the Imagine Communications booth during the NAB show, featured the panelists Antonio Neri, EVP/GM, Enterprise Group, HPE, and Steve Guggenheimer, CVP and Chief Evangelist of Microsoft Corporation.

Cloud-based technologies are becoming more and more popular, with less speculation and more real interest. But as the technology grows in popularity, complexity comes with it: there are options to choose from and providers to select in order to put the pieces together. In the broadcast world everyone knows how complex the market is – stated the panelists – we want to make the complexity understandable. The cooperation between IC, Microsoft and HP Enterprise allows companies to get a full solution and reduce their own risk, so the customer does not need to talk to separate partners. It also helps avoid confusion about what works together and what is not the best fit.

IC, Microsoft and HP Enterprise together provide a solution from industry leaders and innovators. Microsoft is the platform provider. HP is more of a cloud broker. Prior to NAB, Imagine Communications launched 3 important applications, which required months and months of testing to confirm that the new solutions work. As Charlie Vogt mentioned, the goal of IC and its partners is for their customers to thrive rather than just survive. The reason is that the move to the cloud, while necessary, is scary: the broadcast community has been on premises for over 50 years, and the change is new.

The discussion stressed that cloud is a partnership – the tools, workflow, software and support all need to work together to provide the reliability and features of the current solution. As broadcasting moves to the cloud, the partnership with HPE addresses the security aspects of the Azure cloud, which are new to the broadcast community.

by Lidia Paulinska | May 21, 2016

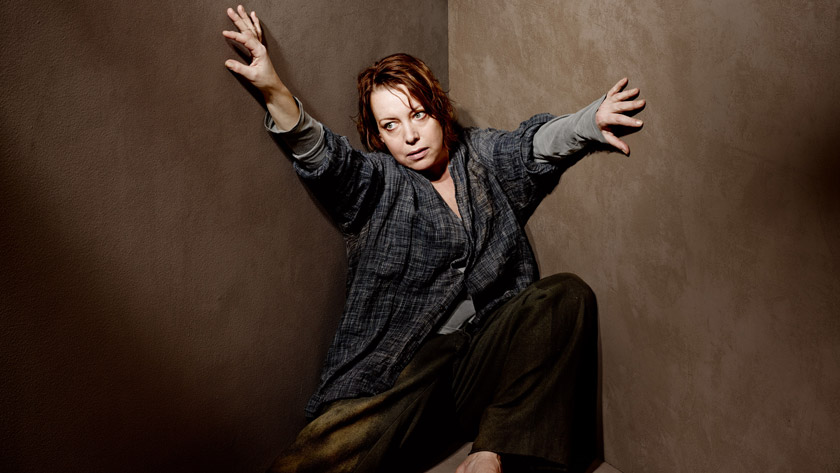

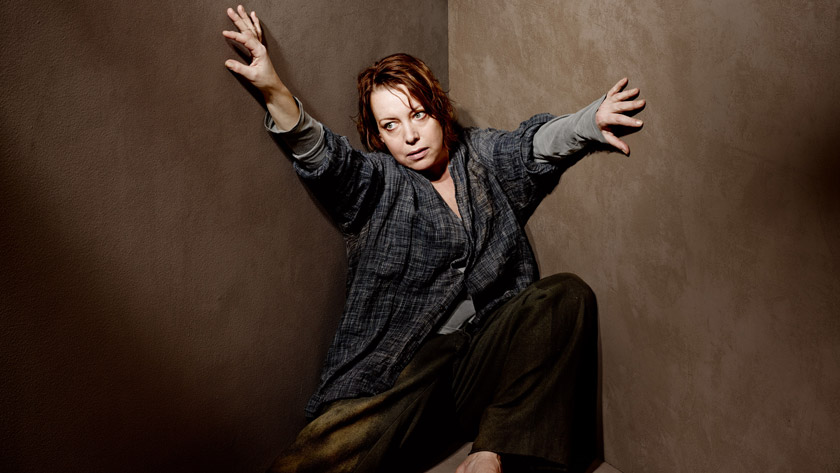

April, Fathom events – On Saturday, April 30, 2016, I had the privilege and pleasure of viewing a screening of a live performance of Richard Strauss’s inexorable one-act opera Elektra,* the concluding operatic work in a year-long 10th anniversary celebration of “MET: LIVE in HD,” which had featured ten of the world’s greatest operas on giant cinema screens throughout the US.

The production premiered at the Aix-en-Provence Festival in France in 2013 and is considered to be a landmark contemporary staging of Strauss’s masterpiece. It was produced by the renowned Patrice Chereau, who tragically died shortly after the opening at the age of 68. (A DVD of that production is available.)

The superb cast is headed by soprano Nina Stemme, who brings smoldering intensity to the title role. Her Elektra is unremittingly consumed with a passion for vengeance upon her mother Klytämnestra, performed masterfully by mezzo-soprano Waltraud Meier, and upon her mother’s lover, the cowardly Aegisth, convincingly portrayed by Burkhard Ulrich; together they have brutally murdered Elektra’s father, Agamemnon. Bass-baritone Eric Owens gives a strong rendering of her sympathetic brother Orest, and Adrianne Pieczonka rounds out this incomparable cast as her weakling sister Chrysothemis, whose banal domestic aspirations play a perfect counterpoint to the possessed Elektra, who has dedicated her life to avenging her father’s murder. She realizes her goal in the end, but at the expense of her sanity.

Staged in an ominously sparse gray space with costumes to match, Chereau’s smoldering rendering of Strauss’s masterpiece is a production for the ages and opera at its best!

* * * Significantly, Sigmund Freud used Sophocles’ Elektra in his analysis of a daughter’s attachment to her father, and Oedipus Rex as the basis for his theory of a son’s attraction to his mother. The so-called “Oedipus” and “Elektra” complexes continue to be very much a part of Freudian psychoanalysis.

by Lidia Paulinska and Hugh McMahon

by Lidia Paulinska | May 21, 2016

April, NAB – Two years ago, when we started to talk about IP, the question was – Can we do it? A year ago we were asking – Should we do it? This year the question is – How do we do it? That is how Steve Reynolds from Imagine Communications described the inception of AIMS, the Alliance for IP Media Solutions, during our 1:1 interview. Reynolds added that in 2015 a lot of customers came to Imagine Communications saying that they wanted to move to IP but were worried that there was going to be fragmentation in that segment of the industry. There was clearly a need for AIMS.

NAB 2016 was the first year for AIMS, an industry consortium led by broadcast engineers, technologists, visionaries, vendors and business executives dedicated to an open-standards approach that moves broadcast and media companies to a virtualized, IP-based environment.

Reynolds explained that AIMS does not develop specs and standards. AIMS is a trade group, it is a marketing organization – he stated. The goal was to bring together the companies that primarily work on broadcast technologies and agree on a common set of standards to use, standards that are already developed. AIMS focuses on educating, evangelizing and spreading the adoption of the technologies, not on developing specs and standards. We are here to help the adoption – he added. When a company joins AIMS it signs the adopter agreement, which says that it will promote the use of these technologies, the AIMS roadmap, as the preferred path to IP production.

More information about AIMS can be found here: http://aimsalliance.org/